I always have considered the Continuous Delivery (CD) for Database world as one of the last frontiers to cross, it is not easy at all and the DevOps guys are brave witnesses about it, however, I consider that it is an aim which can be reachable with a mix of effort, compromise and obviously investment (time and money).

The previous aspects mentioned above are from my point of view the most valuable, but we also need to be realistic about in which stage of this process our companies are, I mean, it is not realistic or fair to pretend that a new startup consider spending many resources for implementing DB deployment under

CD vision, but if the team only requires to deploy through a few environments it can be feasible to use a simplistic approach based on using PowerShell.

In this article I will use Microsoft SQL Server as DB Engine and PowerShell, the main task will consist in getting a list of articles from Products table and export to CSV file with a tabular delimiter. I used to work with PowerShell ISE, but you can create and edit the PowerShell file even in Notepad, at the end the most important part is to be comfortable with the IDE chosen.

We should start turn on the cmdlet-style parameter, I would like to recommend this interesting link about this statement:

https://www.itprotoday.com/management-mobility/what-does-powershells-cmdletbinding-do

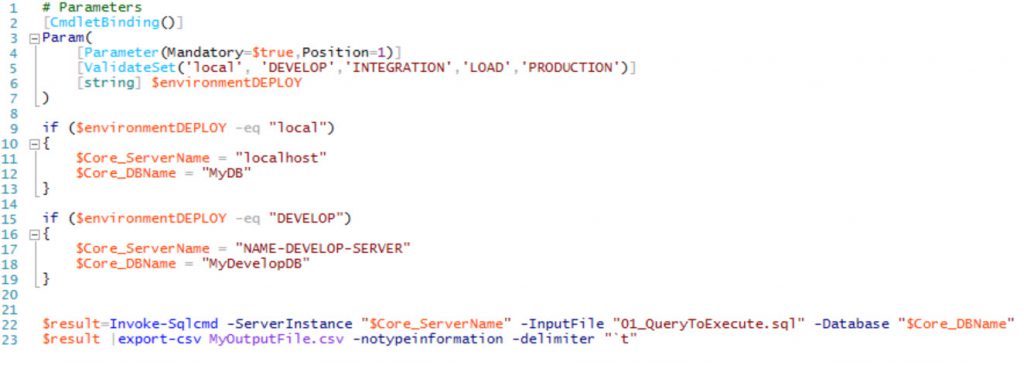

Basically, when we put on the top of our file we are enabled to start using common parameters and becoming our function in a new advanced function. The next step consists in write the sentences for requesting a specific parameter which should match with a predefined list of environments, this parameter is mandatory and correspondence to position one, next statement is the validation of input parameter and is part of advance parameter which allows us to validate or constraint the value supplied by user, and finally it is assigned to $environmentDEPLOY variable.

# Parameters

[CmdletBinding()]

Param( [Parameter(Mandatory=$true,Position=1)]

[ValidateSet('local', 'DEVELOP','INTEGRATION','LOAD','PRODUCTION')]

[string] $environmentDEPLOY )

In short words, what did we make in the above code? Enable advanced functions and capture a new parameter which has been able to validate against a list or array of string, in case that validation passed it will assign the captured value to $environmentDEPLOY variable. The next blocks will execute a group of if statement to assign the respective name of a server to a Server and DB variables.

if ($environmentDEPLOY -eq "local")

{

$Core_ServerName = "localhost"

$Core_DBName = "MyDB"

}

And for the rest of the environments you could have something like this:

if ($environmentDEPLOY -eq "DEVELOP")

{

$Core_ServerName = "NAME-DEVELOP-SERVER"

$Core_DBName = "MyDevelopDB"

}

Now, we have the logic to load Server and DB variables based on the user input. For a simple illustration, we will be using the AdventureWorks DB and we are creating a query that I hope this part would be interesting for you, it should save as SQL script and contains the query, however, it could perfectly have a group of SQL sentences and call multiples queries or stored procedures, the big advantage, in this case, is the use of the Invoke-SQLCmd cmdlet, with him we can run sqlcmd script files in Powershell.

Check the next link for getting more details about the syntax of Invoke-SQLCmd:

https://docs.microsoft.com/es-es/sql/database-engine/invoke-sqlcmd-cmdlet?view=sql-server-2014

In our case, we only need to supply the values previously assigned to Server and DB variables, if you decide to specify an input file parameter only need to add a new parameter, in this article, I am using a predefined file which must be in the same folder than PowerShell script.

$result=Invoke-Sqlcmd -ServerInstance "$Core_ServerName" -InputFile "01_QueryToExecute.sql" -Database "$Core_DBName"

After executing the query, we have a request from a user to export the result into a CSV file. You can see how many interesting cmdlets we have implemented until now, well, it is not all, we can use the cmdlet export-csv for taking the results got in the previous statement and export as CSV output file. The next statement will be the last inside the PowerShell script:

$result |export-csv MyOutputFile.csv -notypeinformation -delimiter "`t"

Reassembling all, you should have a PowerShell script would look as this:

You can download the source code from my GitHub repository, here the link:

Once time that we have completed the typing of our PowerShell script, we can run click on the play button in PowerShell ISE, it shows us the next image:

![]()

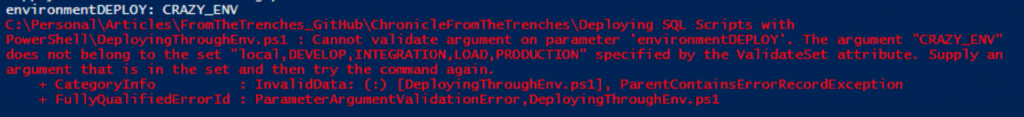

Maybe are you guessing if the validation is useful for this script, try to type any nonlogic name for the server and you should see a message like this:

Otherwise, if you supply a valid Environment input, and I refer to a value which is contained inside the list of ValidateSet statement it is going to work as expected.

Summary

As you have seen, the benefit of using this sort of mix between PowerShell and basic T-SQL for deploying through different Environment is clearly, easy and transparent. Meanwhile, you work in more sophisticated solution close to a real CD approach, the approach covered in this article allow you to have a simple and easy to adapt deploy script.

Happy Querying!!!!